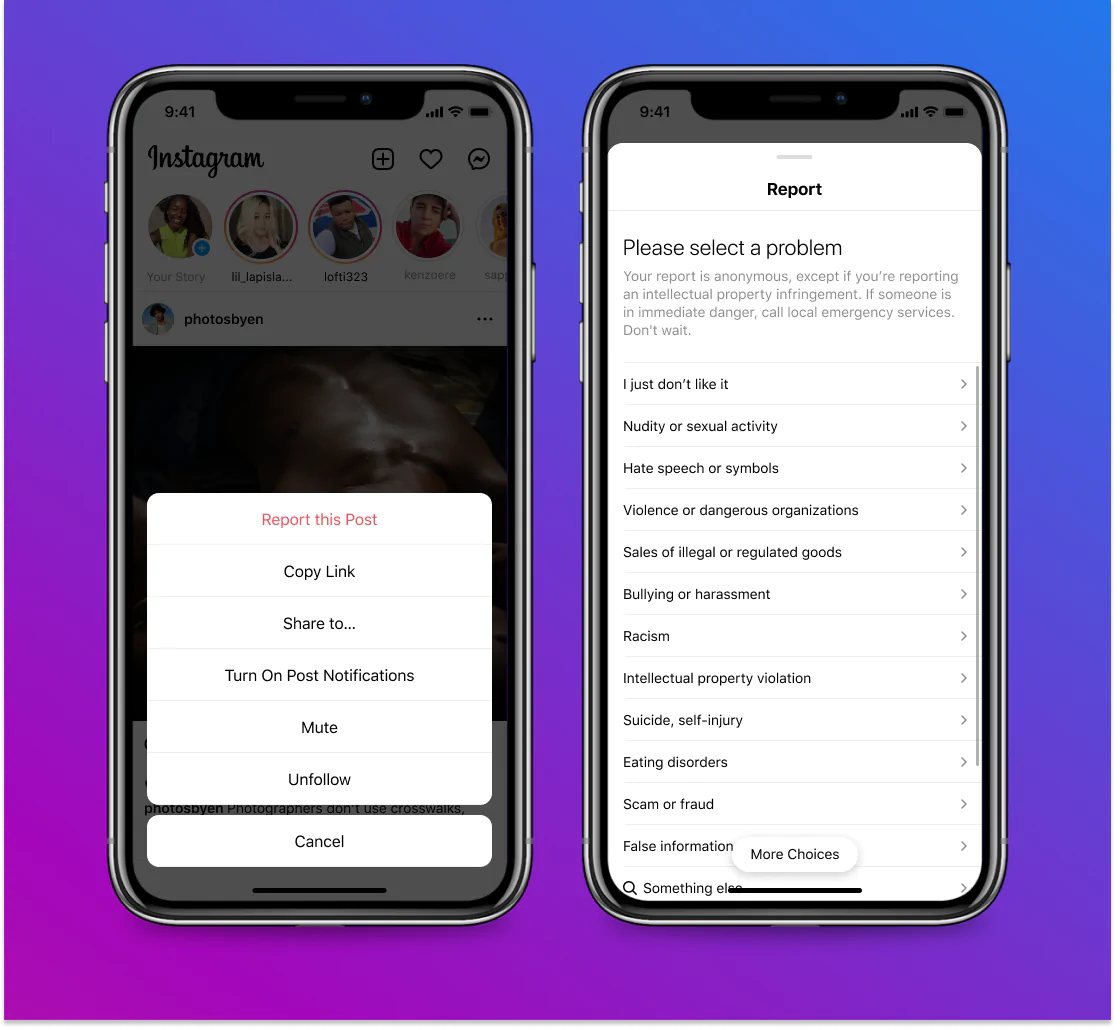

Social networks can sometimes be dangerous, especially for less experienced or younger users, and the Instagram team has announced new measures to reduce the visibility of potentially harmful content.

According to the popular social network, the algorithm that determines how posts are ordered in users' feeds and in Instagram Stories will give lower priority to content that may contain bullying, hate speech, or incitement to violence.

Instagram declares war on bullying, hatred, and violence

Although Instagram’s rules already prohibit most of this type of content, the change could affect borderline posts or those that have not yet been reviewed by the app's moderators.

One of the systems the social network has developed to quickly identify and hide harmful content is based on similarity: posts deemed “similar” to ones that have previously been removed will be much less visible, even to the account's own followers.

Instagram also explains that if its algorithms predict, based on a user's reporting history, that the user is likely to report a post, that post will appear lower in their feed.

This change follows a similar one introduced in 2020, when Instagram began demoting accounts that shared information its fact-checkers had found to be false. This time, however, the company specifies that only individual posts will be “punished”, “not accounts in general”.

The company has confirmed that potentially harmful posts can still be removed if they violate its Community Guidelines.
